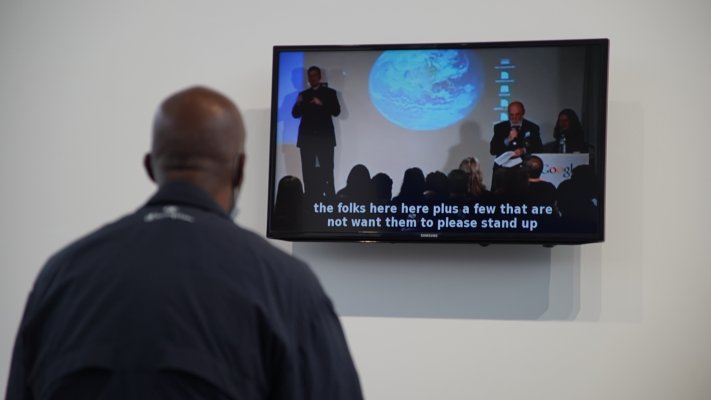

Excess Ability, 2014

Digital video (color, sound) displayed on monitor; vinyl logo; stools; and artificial potted plant

Running time: 7 minutes, 16 seconds, looped

Courtesy of the artist

“Voice recognition algorithms are intended to recognize sounds and translate them into words and symbols; image recognition algorithms try to recognize which objects appear in a picture. I’m interested in the story behind these algorithms. What does the algorithm understand? And what does it miss? And how do these algorithms change our view of the world?”

—Lior Zalmanson

On November 19, 2009, Google announced that its YouTube service would now offer automatic captions by integrating a type of voice recognition technology called automatic speech recognition (ASR). The launch event was celebratory: after the deaf engineer Ken Harrenstein screened a short clip of Google’s CEO that had been captioned using ASR, he signed, “We did it! This is finally it! We got it!” Ironically, Harrenstein pointed to the mistakes in the transcription as proof that it had been generated by a machine and not a human.

Lior Zalmanson (he/him/his), whose art explores the normally opaque algorithms created by tech companies, performed a simple experiment: he used Google’s own captioning software to create captions of the video of its announcement. Unsurprisingly, the transcription is full of mistakes. For example, “accessibility” becomes “excess ability,” giving the work its title. More than merely demonstrating the limitations of captioning technology (which has greatly improved since 2009), the video makes a profound observation: the “abilities” of both machines and disabled people are always measured against the abilities of “normal” humans—and are often found lacking. As more algorithms are created to increase accessibility (or “excess ability”) for disabled people, how will their coding define what is “normal,” and what role will disabled communities play in their creation?